FAIR Symposium Tackles Online Toxicity, AI in Recruiting

The rapid development of large language models (LLMs) has reshaped the landscape of artificial intelligence, with particular impact on content moderation, automated decision-making, and ethical AI deployment. As these models scale and become more integrated into everyday life, their potential to propagate bias and produce unintended harmful outputs has become an urgent concern for researchers and businesses alike.

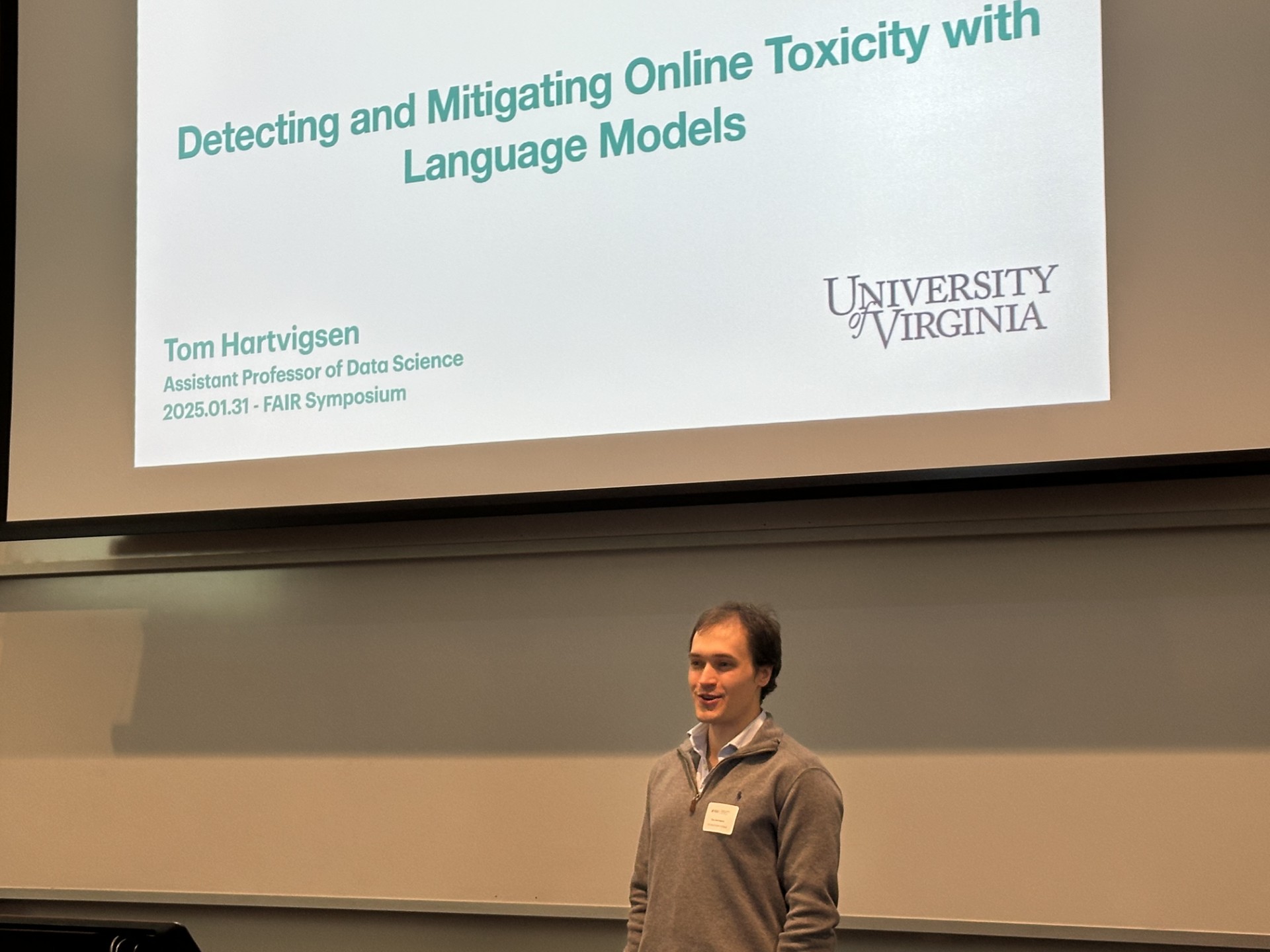

The LaCross Institute for Ethical Artificial Intelligence in Business recently convened at the University of Virginia Darden School of Business for the 2025 Fellowship in AI Research (FAIR) Symposium to examine the complexities of AI in two specific business applications: content moderation and recruiting. The half-day event brought together faculty, researchers, and industry experts to celebrate the prior year’s fellowship recipients, Tom Hartvigsen and Mona Sloane from the School of Data Science, and to hear what insights they and their teams had gleaned from a year of research.

Session 1: Detecting and Mitigating Online Toxicity with Language Models

This session was led by Hartvigsen, assistant professor of data science, together with Steven Johnson, an assistant professor at UVA’s McIntire School of Commerce, and Maarten Sap of Carnegie Mellon University. Their team included postdoctoral researcher Youngwoo Kim and Ph.D. students Guangya Wan and Miles Zhou from the School of Data Science. The group examined the challenges of training LLMs to detect and mitigate online toxicity, focusing on the limitations of existing frameworks, particularly their difficulty distinguishing harmful content from legitimate mentions of identity groups.

Hartvigsen emphasized the need for AI systems that can adapt to evolving definitions of toxicity while preserving the integrity of online discourse. He noted that GPT-3 was trained on approximately 10% of all human-written text, and that this training data included a large portion of older Reddit content. One of the early problems such models faced was mistaking identity mentions for signals of hate.

“In their training data, when there were mentions of different identity groups, those were often attacks against those identity groups,” Hartvigsen said. “And so, the models would learn, ‘Ah, when I see a mention of an identity group, that is the signal of hate.’”

Because LLM training data often consist of this kind of scraped internet content, including blogs, social media, and even government archives, the models are highly versatile yet susceptible to inherited biases. Hartvigsen said that as the models’ training data continue to grow, so, too, do the tools that give humans the power to moderate and efficiently monitor these online conversations. He said he is excited about the potential for LLMs to serve as cost-effective, nuanced content moderation tools that can intercept these biases.

Johnson argued that content moderation is essential to the user value proposition, because social media plays a key role in modern society for self-expression, social connection, and access to high-quality information. When people choose a platform, they want an environment that is not full of attacks and hate, so guardrails need to be in place for users to feel comfortable expressing their views. For the same reason, moderation is also essential to the advertiser value proposition at companies like Meta, which, judged by its revenue, is essentially a digital advertising firm.

“Advertisers are concerned that they don't want their brands associated with toxic content,” Johnson said. “If you're trying to sell a really feel-good product, you don't want that right next to a really violent video.”

The Challenges of Bias in AI Content Moderation

A significant impediment to content moderation is cost. Kim noted that setting up a taxonomy for these systems, defining market risk, and then the even heavier lift of training the models can be very expensive for companies. Ideally, to keep costs down, LLMs would be adapted only at test time, by adjusting prompts to change their behavior and output rather than repeating the training process.

Content moderation on a platform like Reddit presents unique challenges because of its decentralized governance model, in which volunteer moderators enforce norms that vary from subreddit to subreddit. Kim said more than 60,000 volunteer moderators are active each day. Because written moderation policies often differ from actual enforcement, AI models trained solely on explicit rules have failed to capture real-world moderation patterns. To make moderators’ jobs easier, the team hopes to make models criteria-aware, grounding them in each subreddit’s existing rules and fine-tuning them on high-quality examples, both positive and negative.
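One way to picture a “criteria-aware” moderator is a prompt that places a subreddit’s written rules next to examples of how its moderators actually ruled. The sketch below is hypothetical and is not the team’s method: it uses in-context examples in a prompt (the test-time approach Kim described as cheaper) rather than fine-tuning, the names `Example` and `build_moderation_prompt` are illustrative, and it only assembles text without calling any model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Example:
    """A past moderation decision (hypothetical record format)."""
    text: str
    removed: bool               # True if moderators removed the comment
    rule: Optional[str] = None  # the rule cited for removal, if any


def build_moderation_prompt(
    subreddit: str, rules: List[str], examples: List[Example], comment: str
) -> str:
    """Assemble a rule-grounded, few-shot moderation prompt (illustrative only).

    The written rules give the model explicit criteria; the examples show how
    those rules were actually enforced, which may differ from their wording.
    """
    lines = [f"You are moderating r/{subreddit}. Its written rules are:"]
    lines += [f"{i}. {rule}" for i, rule in enumerate(rules, start=1)]
    lines.append("")
    lines.append("Past moderator decisions:")
    for ex in examples:
        verdict = f"REMOVE (rule: {ex.rule})" if ex.removed else "KEEP"
        lines.append(f'Comment: "{ex.text}" -> {verdict}')
    lines.append("")
    lines.append("Answer KEEP or REMOVE for the comment below, citing the rule if removed.")
    lines.append(f'Comment: "{comment}" ->')
    return "\n".join(lines)


# Toy usage: invented rules and comments, not data from the study.
rules = ["Be civil; no personal attacks.", "No self-promotion."]
examples = [
    Example("Great write-up, thanks for the sources!", removed=False),
    Example("Only an idiot would believe this.", removed=True,
            rule="Be civil; no personal attacks."),
]
prompt = build_moderation_prompt("AskScience", rules, examples,
                                 "Subscribe to my channel for more!")
print(prompt)
```

Swapping in a different subreddit’s rules and decision history changes the model’s behavior without retraining, which is the cost advantage of working at test time.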

Another significant issue in automated moderation is bias in filtering identity-based language. Early moderation systems disproportionately flagged African American English (AAE) dialect as toxic, reinforcing systemic biases instead of mitigating them. Context-dependent moderation remains a major hurdle, as AI struggles to differentiate between harmful speech and legitimate discussions, satire, or reclaimed slurs.

Researchers Wan and Zhou found that models perform significantly worse when processing AAE than when processing Standard American English (SAE). When the team translated the same multiple-choice reasoning tasks into both dialects, models consistently produced fewer correct answers and less consistent explanations for the AAE versions. Linguistic analysis also showed that explanations for AAE prompts were often simpler, contained more perceptual and social language, and expressed greater uncertainty, as indicated by words like “maybe” or “possibly.”

These discrepancies likely stem from imbalances in data representation, as AI training data overwhelmingly favor SAE. While some rule-based prompts reduce the bias, preliminary findings suggest these interventions provide only partial improvement. The persistence of bias across AI systems highlights the pressing need for dialect-inclusive datasets, bias-aware training methods, and greater transparency in model decision-making. As AI becomes more integrated into decision-making processes, ensuring linguistic equity will be critical for fostering fairness and trust in AI applications.
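The kind of comparison Wan and Zhou describe can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the team’s code: the record format, the `HEDGES` word list, and the function names are hypothetical, and the toy records are invented inputs, not study data. It measures the two signals reported above, accuracy on the same items posed in each dialect and the rate of uncertainty words such as “maybe” or “possibly” in the model’s explanations.

```python
import re
from typing import Dict, List

# Hypothetical hedge lexicon; the study's actual word list is not described.
HEDGES = {"maybe", "possibly", "perhaps", "might"}


def accuracy(records: List[dict]) -> float:
    """Fraction of items where the model's answer matches the gold answer."""
    return sum(r["answer"] == r["gold"] for r in records) / len(records)


def hedge_rate(records: List[dict]) -> float:
    """Hedge words per token, pooled over all model explanations."""
    tokens = [
        tok
        for r in records
        for tok in re.findall(r"[a-z']+", r["explanation"].lower())
    ]
    return sum(tok in HEDGES for tok in tokens) / len(tokens) if tokens else 0.0


def dialect_gap(by_dialect: Dict[str, List[dict]]) -> Dict[str, float]:
    """Positive values mean lower accuracy or more hedging on the AAE versions."""
    sae, aae = by_dialect["SAE"], by_dialect["AAE"]
    return {
        "accuracy_gap": accuracy(sae) - accuracy(aae),
        "hedge_gap": hedge_rate(aae) - hedge_rate(sae),
    }


# Toy records: the same two items posed in each dialect, with the model's
# answer and explanation for each (invented, not study data).
toy = {
    "SAE": [
        {"gold": "B", "answer": "B", "explanation": "The ice melts because the room is warm."},
        {"gold": "A", "answer": "A", "explanation": "Plants need light to grow."},
    ],
    "AAE": [
        {"gold": "B", "answer": "B", "explanation": "Maybe the ice melts from the heat."},
        {"gold": "A", "answer": "C", "explanation": "It could possibly be the water."},
    ],
}
print(dialect_gap(toy))
```

Because both dialect versions share the same items and gold answers, a nonzero accuracy gap points to the dialect of the prompt rather than to differences in question difficulty.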

Session 2: New Frontiers of Innovation and Accountability in AI Recruiting

In this interactive session, UVA Assistant Professor of Data Science and Media Studies Mona Sloane presented findings from her research on the increasing role of AI in talent acquisition. As part of the New Frontiers of Innovation and Accountability in AI Recruiting project, the Sloane Lab team has conducted more than 100 interviews and compiled a database of 3,000 HR tech companies to assess how AI is integrated into recruiting. A key takeaway from the discussion was that, contrary to prevailing narratives, AI is not widely used for automated candidate screening. Instead, many recruiters continue to rely on traditional Boolean searches rather than AI-generated candidate rankings, citing a lack of transparency in how AI reaches its decisions.

Despite the proliferation of automated screening technologies, concerns persist regarding algorithmic discrimination and the lack of clear regulatory frameworks to govern AI’s role in employment decisions.

One major concern is bias in AI-driven candidate evaluation, where models trained on historical hiring data may reinforce existing prejudices. Studies have shown that AI hiring systems may unintentionally prioritize candidates from overrepresented demographics, disadvantaging qualified applicants from underrepresented backgrounds. In one widely reported case, Amazon built an experimental model to rate job candidates and trained it on résumés submitted to the company over a 10-year period, most of which came from men; the model learned to penalize résumés that included the word “women’s.” Similar risks extend to racial, socioeconomic, and educational biases, where AI models learn patterns that may perpetuate workplace inequality.

Transparency remains a key barrier to AI adoption in recruitment. Many AI-driven hiring platforms operate as black boxes, offering little insight into how candidate rankings are determined. This lack of interpretability has led to hesitancy among HR professionals, as employers face increasing legal and ethical scrutiny over the fairness of automated hiring decisions. Regulation in the U.S. and Europe is only beginning to address these concerns, with recent laws requiring greater disclosure of AI use in hiring and compliance with anti-discrimination measures.

To help improve accountability, the Sloane Lab researchers are exploring frameworks for explainable AI in recruiting that can provide greater clarity into how models evaluate candidates. With this project, the team aims to close a large existing research gap by creating a publicly accessible database. Sloane said the goal is to enhance transparency by cataloging recruiting AI tools, thereby helping practitioners make better-informed procurement decisions and giving job candidates more visibility into the intersection of AI recruiting and the labor market.

“We want to provide this database so that we can create a space in which auditing of these systems is easier because we know what they are, what kind of products are out there, what assumptions they have, what training data they use, and so on,” Sloane said.

Future Directions in AI Ethics and Governance

As AI models continue to advance, researchers stressed the importance of developing governance mechanisms that balance innovation with accountability. Key areas for future exploration include:

- Enhancing dataset diversity to reduce bias in model training.

- Improving explainability in AI decision-making to foster trust in automated systems.

- Integrating participatory AI frameworks, ensuring that AI serves as a tool to empower rather than replace human decision-makers.

The discussion underscored the dual role of AI in both enabling and challenging ethical business practices. As companies increasingly integrate LLMs into their operations, ensuring that these systems align with equitable, transparent, and human-centric principles will be imperative to their long-term success.